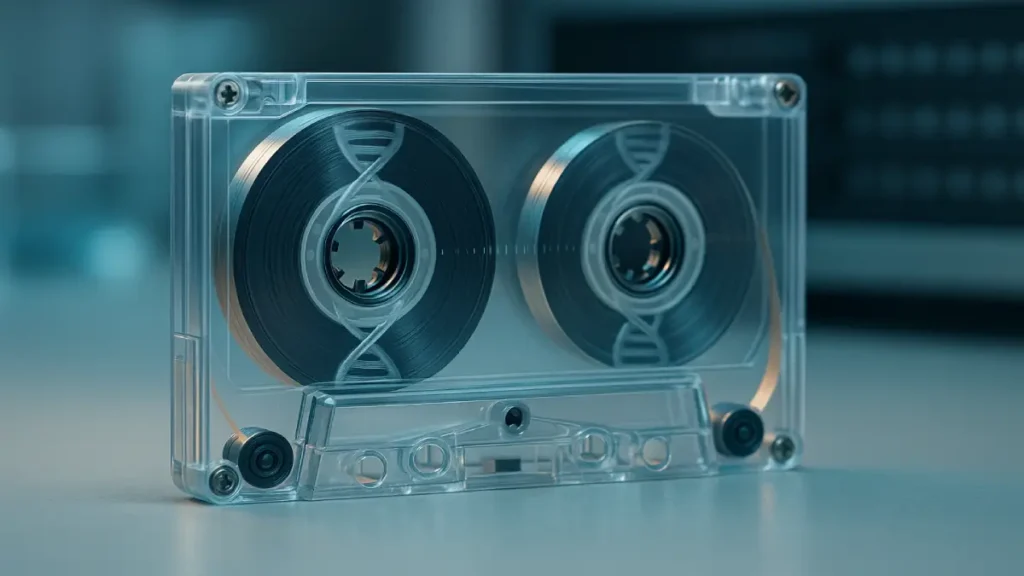

A new “DNA cassette”—a plastic tape embedded with synthetic DNA that stores up to 36 petabytes—has revived a bold idea: use biology to solve the world’s data deluge, with densities and lifetimes far beyond today’s silicon and magnetic media. But capacity headlines alone don’t make an infrastructure revolution; only a system-of-systems view—synthesis, encoding, access layers, preservation, standards, and market readiness—can show whether a DNA cassette could truly transform global storage.

What the DNA cassette is

A research team imprinted strands of synthetic DNA on a moving plastic tape and overlaid “barcodes” for addressable retrieval, creating a cartridge-like medium that looks retro but behaves like ultra-dense cold or warm storage, with a reported total capacity of about 36 PB per cassette. The tape integrates a protective “crystal armor” of zeolitic imidazolate frameworks to stabilize DNA bonds, aiming for century-scale durability with negligible standby energy. The architecture targets both archival (cold) and on-demand (warm) access tiers by combining high-density DNA payloads with physically navigable partitions that can be selected and sequenced.

Why density and durability matter

DNA packs data at molecular scale; one kilometer of the cassette tape reportedly holds up to 28.6 mg of DNA, translating into multi-petabyte payloads that dwarf conventional tape reels per unit footprint. Unlike hard drives and NAND, which need periodic replacement and data migration, DNA, especially with protective matrices, promises multi-century retention in ambient conditions, slashing energy, migration, and e‑waste burdens for long-term archives. This aligns with a broader push from labs and startups to build greener archival substrates that decouple capacity growth from the power and cooling curves of silicon data centers.
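As a rough sanity check on the density claim, the sketch below estimates a theoretical ceiling for 28.6 mg of DNA. The inputs are assumptions, not figures from the cassette report: an average single-stranded nucleotide mass of about 330 g/mol, an ideal 2 bits per base, and a single copy of every strand.

```python
# Back-of-envelope ceiling on DNA data density (illustrative only).
AVOGADRO = 6.02214076e23      # molecules per mole
NT_MASS_G_PER_MOL = 330.0     # ASSUMPTION: average mass of one ssDNA nucleotide
BITS_PER_NT = 2.0             # ASSUMPTION: ideal encoding with zero overhead


def max_bytes_per_gram() -> float:
    """Upper bound on bytes per gram if every nucleotide carried 2 bits."""
    nucleotides_per_gram = AVOGADRO / NT_MASS_G_PER_MOL
    return nucleotides_per_gram * BITS_PER_NT / 8


def max_bytes_for_mass(mass_mg: float) -> float:
    """Scale the per-gram bound to a mass given in milligrams."""
    return max_bytes_per_gram() * mass_mg / 1000.0


if __name__ == "__main__":
    print(f"ideal density: {max_bytes_per_gram() / 1e18:.0f} EB per gram")
    print(f"ceiling for 28.6 mg: {max_bytes_for_mass(28.6) / 1e18:.1f} EB")
```

Under these assumptions the bound lands in the exabyte range per kilometer of tape. Real systems keep many physical copies of each strand and spend bases on addressing and error correction, so practical payloads sit far below this ceiling while still reaching petabyte scale.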

The write path: synthesis as the bottleneck

Encoding bits into A‑T‑C‑G is straightforward; writing trillions of bases quickly and cheaply is the hard part. Today, DNA synthesis still drives cost and throughput limits, historically hundreds to thousands of dollars per megabyte, though emerging enzymatic and lithographic methods promise steep declines via massive parallelization and error‑tolerant codes. Analyses suggest that with photolithography and aggressive error-correction, marginal costs could approach competitiveness with tape under realistic yields, flipping synthesis quality requirements from “perfect” to “good enough plus codes.” Commercial efforts like Twist’s Atlas Data Storage spinout point to chip-based, high-throughput enzymatic pipelines designed explicitly for data, not biology, to cross the scale chasm.
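To make the "straightforward" part concrete, here is a minimal sketch of the simplest possible mapping: two bits per base, four bases per byte. It also includes a majority vote across redundant reads as a stand-in for the "good enough plus codes" idea. Both are illustrative assumptions, not the cassette team's codec; production schemes typically avoid long runs of one base, balance GC content, and use stronger codes such as Reed-Solomon or fountain codes.

```python
from collections import Counter
from typing import List

BASES = "ACGT"  # ASSUMPTION: A=00, C=01, G=10, T=11 (an arbitrary mapping)


def bytes_to_dna(data: bytes) -> str:
    """Encode each byte as four bases, most significant bit pair first."""
    out = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def dna_to_bytes(strand: str) -> bytes:
    """Invert bytes_to_dna; the strand length must be a multiple of four."""
    if len(strand) % 4 != 0:
        raise ValueError("strand length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)


def consensus(reads: List[str]) -> str:
    """Per-position majority vote over equal-length reads.

    Handles substitution errors only; insertions and deletions, which are
    common in real synthesis and sequencing, need alignment first.
    """
    if len({len(r) for r in reads}) != 1:
        raise ValueError("reads must be non-empty and of equal length")
    return "".join(Counter(col).most_common(1)[0][0] for col in zip(*reads))


if __name__ == "__main__":
    strand = bytes_to_dna(b"Hi")
    noisy = [strand, strand, "CATACGGC"]  # third read has one substitution
    print(strand, dna_to_bytes(consensus(noisy)))
```

The point of the consensus step is that a writer can tolerate occasional base errors as long as the decoding stack has enough redundancy to outvote them.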

The read path: access models and latency

Reading DNA means sequencing it, so fast, selective retrieval is the second challenge. The cassette tackles it with “barcoded” partitions akin to shelf→book address spaces. This physical index plus molecular barcodes aims to shorten seek times relative to bulk pool retrieval, nudging DNA from deep cold archives toward warm-access tiers where on-demand reads matter. Even so, read and write latency remains orders of magnitude higher than for electronic media, making DNA best suited to immutable archives, compliance vaults, media preservation, and AI corpus “cold lakes” rather than online workloads with high query rates.
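The shelf→book analogy can be sketched as a two-level lookup: a tape-level barcode selects a partition, and a molecular tag selects the strands for one file within it. The class names, barcode format, and fields below are hypothetical and chosen for illustration; the source does not describe the cassette's actual index format.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Partition:
    barcode: str     # tape-level barcode (hypothetical format)
    offset_mm: int   # position along the tape the drive would seek to
    files: Dict[str, str] = field(default_factory=dict)  # file name -> molecular tag


class CassetteIndex:
    """Two-level address space: partition barcode (shelf), molecular tag (book)."""

    def __init__(self) -> None:
        self._partitions: Dict[str, Partition] = {}
        self._file_to_barcode: Dict[str, str] = {}

    def add_partition(self, barcode: str, offset_mm: int) -> None:
        self._partitions[barcode] = Partition(barcode, offset_mm)

    def register_file(self, barcode: str, name: str, molecular_tag: str) -> None:
        # Raises KeyError if the partition was never added.
        self._partitions[barcode].files[name] = molecular_tag
        self._file_to_barcode[name] = barcode

    def locate(self, name: str) -> Tuple[str, int, str]:
        """Return (barcode, tape offset, molecular tag) for one file."""
        barcode = self._file_to_barcode[name]
        partition = self._partitions[barcode]
        return barcode, partition.offset_mm, partition.files[name]


if __name__ == "__main__":
    index = CassetteIndex()
    index.add_partition("P-0007", offset_mm=1250)
    index.register_file("P-0007", "photo.tiff", "ACGTTGCA")
    print(index.locate("photo.tiff"))
```

The first level is resolved mechanically by moving the tape; only the second level involves chemistry, which is why a physical index can cut seek time compared with sequencing a bulk pool.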

System integration: cassette mechanics meet biofoundry

A deployable DNA cassette system requires more than media; it needs an integrated supply chain of DNA writers, barcoding robots, sequencer bays, and environmental modules packaged like modern tape libraries. Hyperscalers already operate robotic tape silos; swapping in DNA cassettes would hinge on compatible robotics, clean handling, and cartridge standards that let facilities mix DNA alongside LTO in tiered hierarchical storage management (HSM) workflows. On the upstream side, biofoundries must deliver synthesis at datacenter volumes with calibrated error profiles tied to the system’s coding stack, not just to biological assays.

Economics and the path to parity

The near-term economics are mixed: the DNA storage market is small today, and cost per TB is not yet competitive with LTO or HDD, but maintenance and energy costs favor DNA over multi-decade horizons. As synthesis costs drop through parallelized chemistries and enzymatic methods, and as error-correcting codes tolerate lower-fidelity writing, models indicate potential parity or advantage for archival tiers, especially when factoring in no-refresh lifetimes. Industry moves (new companies, spin‑outs, and public‑private R&D roadmaps) signal that commercialization efforts are aligning around end‑to‑end platforms rather than point inventions.
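The no-refresh argument can be illustrated with a toy total-cost-of-ownership model. Every number and parameter name below is a hypothetical placeholder, not market data: the sketch only shows how a high one-time write cost can be overtaken by recurring media refreshes and running costs over a long horizon.

```python
import math
from typing import Optional


def refreshed_media_tco(years: int, media_cost_per_tb: float,
                        refresh_years: int, opex_per_tb_year: float) -> float:
    """Cost per TB for media that is rebought every `refresh_years`."""
    generations = math.ceil(years / refresh_years)
    return generations * media_cost_per_tb + years * opex_per_tb_year


def write_once_tco(years: int, write_cost_per_tb: float,
                   opex_per_tb_year: float) -> float:
    """Cost per TB for media written once and never refreshed."""
    return write_cost_per_tb + years * opex_per_tb_year


def breakeven_year(horizon: int, **p: float) -> Optional[int]:
    """First year in which the write-once medium is no more expensive."""
    for year in range(1, horizon + 1):
        refreshed = refreshed_media_tco(
            year, p["media_cost"], int(p["refresh_years"]), p["media_opex"])
        write_once = write_once_tco(year, p["write_cost"], p["write_once_opex"])
        if write_once <= refreshed:
            return year
    return None


if __name__ == "__main__":
    # HYPOTHETICAL inputs in arbitrary currency units per TB.
    params = dict(media_cost=10.0, refresh_years=10, media_opex=1.0,
                  write_cost=100.0, write_once_opex=0.05)
    print("break-even year:", breakeven_year(100, **params))
```

With these placeholder inputs the crossover arrives only after several refresh generations, which matches the article's framing: the case for DNA rests on multi-decade horizons, and it tightens as the one-time write cost falls.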

Standards, formats, and trust

For a DNA cassette to be more than a lab feat, the ecosystem needs standard encodings, metadata schemas, chain‑of‑custody practices, and physical cartridge specs to ensure interoperability and compliance. Medical and regulated archives are a prime early fit, but they will demand auditable ECC, verifiable integrity proofs, and policy frameworks for synthetic DNA use and cross-border storage. Large institutes piloting microchip DNA platforms underscore the need for open interfaces that let enterprises avoid vendor lock‑in across writers, readers, and libraries.
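One way to picture "verifiable integrity proofs" is a hash manifest written alongside the payload at encode time and rechecked after every restore. The sketch below uses SHA-256 from Python's standard library; the manifest layout and function names are assumptions for illustration, not a proposed or existing standard.

```python
import hashlib
import json
from typing import Dict, List


def build_manifest(chunks: Dict[str, bytes]) -> dict:
    """Hash every chunk, then hash the sorted table of hashes as a root digest."""
    entries = {name: hashlib.sha256(data).hexdigest()
               for name, data in sorted(chunks.items())}
    root = hashlib.sha256(
        json.dumps(entries, sort_keys=True).encode("utf-8")).hexdigest()
    return {"algorithm": "sha256", "entries": entries, "root": root}


def verify(manifest: dict, restored: Dict[str, bytes]) -> List[str]:
    """Return the names of chunks that are missing or whose digest differs."""
    failed = []
    for name, expected in manifest["entries"].items():
        data = restored.get(name)
        if data is None or hashlib.sha256(data).hexdigest() != expected:
            failed.append(name)
    return failed


if __name__ == "__main__":
    original = {"a": b"genome scan", "b": b"consent form"}
    manifest = build_manifest(original)
    tampered = dict(original, b=b"consent f0rm")
    print(verify(manifest, original), verify(manifest, tampered))
```

An auditor who holds only the root digest can confirm that the manifest itself has not been altered, and the per-chunk digests then localize any decoding or handling error to a specific file.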

Environmental and second‑order effects

If even a fraction of cold storage migrates to DNA cassettes, datacenters could reduce energy draw and hardware churn by avoiding periodic media refresh and forklift upgrades, with knock‑on reductions in embodied carbon and e‑waste. That, in turn, shifts upstream demand: less reliance on rare materials in magnetic or solid-state media, and more on benign reagents, chip-foundry capacity for enzymatic synthesis, and recyclable polymers for cartridges. The real sustainability test will be end‑to‑end life-cycle assessments (LCAs) comparing DNA pipelines to LTO/NAND over 50–100 years, an analysis that early adopters should demand alongside pilot deployments.

What the cassette changes—and what it doesn’t

The cassette form factor reframes DNA storage as something operators already understand: spooled media with robotic handling, partitions, and barcodes, not just vials in a freezer. It doesn’t erase the core constraints—write speed, read latency, synthesis cost—but it productizes navigation and durability in a way that makes DNA plausible for warm/cold tiers instead of science projects. In practical terms, the earliest wins will likely be in cultural heritage, media archives, scientific datasets, and sovereign compliance vaults where long life, density, and energy savings outweigh latency.

What to watch in the next 24 months

- Write cost curves: enzymatic and photolithographic synthesis throughput vs. error profiles paired with new ECC schemes.

- Cartridge standards: open specs for cassette geometry, indexing, and sequencing bay interfaces that mirror LTO ecosystems.

- Pilot libraries: hyperscalers or national archives running mixed LTO/DNA racks with real SLAs for restore time and integrity.

- Regulatory clarity: guidance for synthetic DNA in data operations, biosecurity guardrails, and cross‑border movement of DNA-encoded information.