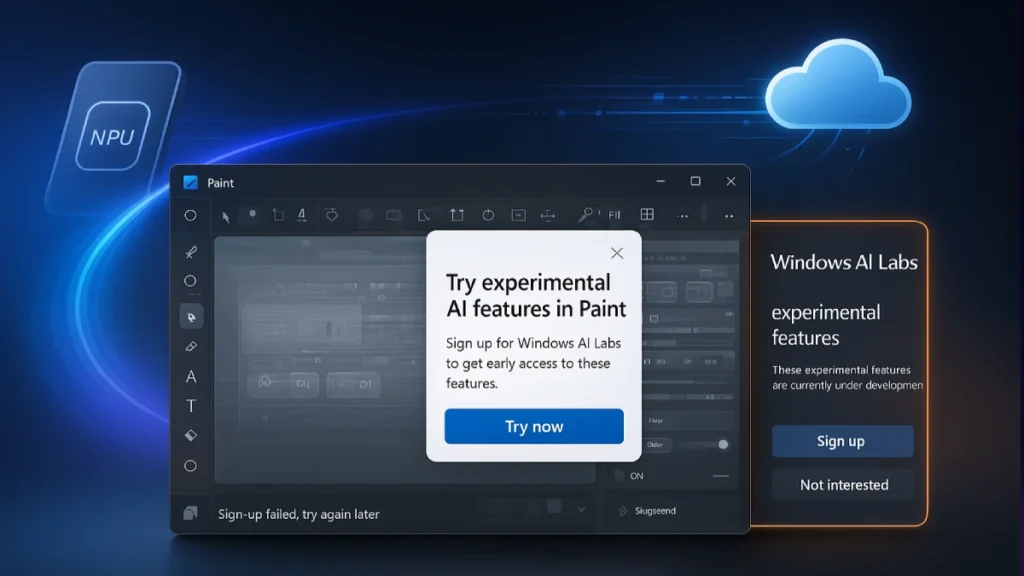

“Windows AI Labs” sounds like a front-row seat to the future of Windows 11: exclusive, early, thrilling. But peel back the label and the program looks less like a perk and more like a structured funnel for user data, telemetry, and market validation across Windows’ AI roadmap. That’s not bad; it’s honest. The smart move isn’t to ask “How do I get in?” but “Why does this exist now, what am I really getting, and what could it cost in stability, privacy, and lock-in?”

What Windows AI Labs really optimizes for

Windows AI Labs is, by design, a proving ground for pre-release features inside core Windows apps—initially Paint—where Microsoft can flip features server-side, observe reaction at scale, and decide what graduates or dies. That’s a pipeline optimization, not a community perk. In practice, Labs is about accelerated product learning: condensed feedback cycles, feature gating, and segmented rollouts without bumping the main Windows Insider channels. The upside is faster iteration; the trade-off is that users become instrumentation. That means unstable UX, revoked features, and consent-by-click to experimentation.

The hidden cost of “early”

Early access sounds like status; in operating systems it usually means volatility. Expect:

- Feature fragility: AI toggles may appear and vanish, leaving projects mid-flow in limbo.

- Version drift: Dependencies across Paint, Photos, and Notepad can create mismatched states and ghost options when backends switch.

- Non-reproducible bugs: Server-side flags mean two identical machines can behave differently, so bugs are hard to reproduce and support and community troubleshooting get messy.

For creators, that could mean broken workflows and unplanned rework. For admins, unpredictable behavior on lab-enrolled machines increases support burden. For students, the risk is simple: the demo works on one PC and not another because the flag never rolled out.

Copilot+ gravity and hardware lock-ins

Windows AI is bifurcating along hardware lines: some features are cloud-accelerated, others require NPUs and Copilot+ PC-class devices for on-device AI. Expect Labs to become a filter that nudges users toward AI-capable hardware, especially as Microsoft tunes which features feel “snappy” only with NPUs. The testbed is not just for code—it’s for price elasticity and upgrade intent. If a feature feels magical only on Copilot+ PCs, Labs will have done invisible marketing via experience design.

Privacy and data shape the product (and vice versa)

AI features are telemetry-hungry because they must measure quality: prompt types, error rates, user edits after AI output, and opt-out behaviors. Consent dialogs aside, Labs participants will likely feed richer qualitative and quantitative data than typical Insiders. That’s useful for better models, but also increases the surface area for mishandled data, retention ambiguity, and cross-app profiling. The responsible stance for any Labs participant:

- Treat experimental features as public: don’t run proprietary material through them.

- Assume logs may include prompts, outputs, and error contexts.

- Favor on-device settings where available; verify what runs locally vs cloud.

The product thesis behind Labs

Why not just expand Windows Insider? Because Windows AI isn’t only OS; it’s the app layer and services layer. A separate funnel lets Microsoft:

- Gate cohorts by app usage, geography, and hardware to test nuanced hypotheses.

- Run A/B/C experiments across Paint, Notepad, Photos without Insider build fragmentation.

- De-risk headline features by observing sentiment curves before broad shipping.

This isolates AI iteration velocity from OS build cadence. It also aligns with a services-first Windows: features can be turned on by account, not just by build.
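To make "turned on by account, not just by build" concrete, here is a minimal sketch of how account-scoped gating is commonly done in general. It is not Microsoft's implementation; the function names, the experiment name, and the NPU requirement flag are all hypothetical. It hashes an account ID into a stable bucket and enables the feature for a percentage of buckets, optionally filtered by hardware.

```python
import hashlib


def bucket(account_id: str, experiment: str, buckets: int = 100) -> int:
    """Map an account to a stable bucket in [0, buckets) for one experiment."""
    # Salting with the experiment name (hypothetical) keeps cohorts
    # independent across experiments.
    key = f"{experiment}:{account_id}".encode("utf-8")
    return int(hashlib.sha256(key).hexdigest(), 16) % buckets


def is_enabled(account_id: str, experiment: str, rollout_percent: int,
               has_npu: bool = False, require_npu: bool = False) -> bool:
    """Decide whether a feature is on for this account (illustrative only)."""
    if require_npu and not has_npu:
        return False  # hardware gate applied before the percentage gate
    return bucket(account_id, experiment) < rollout_percent


# Same build, same app version, different accounts: the answer can differ.
for account in ("alice@example.com", "bob@example.com"):
    print(account, is_enabled(account, "paint-ai-demo", rollout_percent=50))
```

Under a scheme like this, two PCs on the same Windows build can show different features, which is one reason flag-driven bugs are hard to reproduce.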

Who should actually join (and who shouldn’t)

Join if:

- The machine is non-critical and set aside for testing.

- The goal is to influence AI-assisted creation features (Paint, Photos, Snipping Tool, Notepad).

- There’s tolerance for feature churn and the habit of filing actionable feedback.

Avoid if:

- The PC is for production work, education deadlines, or live demos.

- The environment is managed, compliance-bound, or has strict data handling rules.

- Predictability matters more than novelty.

A practical compromise: sandbox participation in a separate local profile or VM, or dedicate a secondary device. Keep creative assets versioned and exported to stable formats.

How to evaluate each experimental feature

Use a four-question rubric before adopting a Labs feature in real work:

- Is it local or cloud? If cloud, what happens offline and what data is sent?

- Is the output reversible? If it disappears tomorrow, can the work continue?

- Is there a non-AI equivalent workflow that’s only 20-30% slower?

- Does it introduce format lock-in? Prefer exportable, layered, or open formats.

If the answer to the second question (reversibility) or the fourth (format lock-in) is risky, ringfence the feature to experiments only.
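For teams that want to apply the rubric consistently, it can be written down as a small checklist function. The sketch below is one possible encoding: the field names and the three verdict labels are assumptions, and the middle "use-with-caution" tier is an addition for illustration rather than part of the rubric itself.

```python
from dataclasses import dataclass


@dataclass
class FeatureAssessment:
    name: str
    runs_locally: bool         # Q1: local rather than cloud?
    output_reversible: bool    # Q2: can work continue if the feature vanishes?
    has_non_ai_fallback: bool  # Q3: a non-AI workflow only 20-30% slower exists?
    open_export_format: bool   # Q4: output exports to an open or layered format?


def recommend(a: FeatureAssessment) -> str:
    """Return a verdict; reversibility and lock-in are the blocking questions."""
    if not a.output_reversible or not a.open_export_format:
        return "experiments-only"
    if not a.runs_locally or not a.has_non_ai_fallback:
        return "use-with-caution"  # assumed middle tier, not from the rubric
    return "ok-for-real-work"


# Hypothetical feature: cloud-based, reversible, has a fallback, open export.
print(recommend(FeatureAssessment("example-feature", False, True, True, True)))
```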

The strategic upside for users

There is real value. Labs users can:

- Influence defaults early: UI labels, safety rails, and quality thresholds.

- Spot rough edges that matter to niche workflows (e.g., layer handling, mask fidelity, text rendering).

- Learn Windows’ AI affordances ahead of peers, informing team policy on what to enable, what to disable, and what to train people on.

Treat it as a learning program. Document findings, maintain a changelog of toggles and observed behavior, and share safe workflows with a community or team.
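A changelog of toggles does not need special tooling. As a minimal sketch, assuming a plain JSON Lines file and hypothetical feature names and states, something like the following records each observation and answers "what state did I last see this feature in?":

```python
import json
from datetime import date


def log_observation(path, feature, state, note, day=None):
    """Append one observation (one JSON object per line) and return it."""
    entry = {
        "date": (day or date.today()).isoformat(),
        "feature": feature,  # free-form label chosen by the user
        "state": state,      # e.g. "on", "off", "changed"
        "note": note,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def load_log(path):
    """Read all observations back in the order they were written."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def last_state(entries, feature):
    """Most recently recorded state for a feature, or None if never seen."""
    for entry in reversed(entries):
        if entry["feature"] == feature:
            return entry["state"]
    return None
```

Because the file is append-only plain text, it is easy to keep under version control next to the creative assets it describes and to share with a team.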

Forecast: where this goes in 12 months

- Expansion beyond Paint: Notepad, Photos, Snipping Tool, File Explorer “AI actions,” and perhaps Settings-driven AI helpers will likely appear in Labs-style gates.

- Tighter hardware pathways: Features that feel instantaneous on NPUs will likely remain “premium” to justify Copilot+ positioning.

- Account-scoped rollouts: Expect Microsoft account enrollment to matter more than Windows build versions for access, deepening Windows-as-a-service dynamics.

In short, Windows AI Labs is a smart move—for Microsoft. It can be a smart move for users too, if treated as a controlled experiment, not a free upgrade.