The reported $300 billion, five-year Oracle–OpenAI agreement is more than a massive cloud contract—it is an infrastructure blueprint that binds compute, power, chips, real estate, and financing into a single operating system for AI at industrial scale. If executed, it will alter cloud market dynamics, reshape energy planning, and force AI leaders to operate like utilities with long-horizon capacity commitments.

What Was Announced

The Contract Scope and Timeline

OpenAI reportedly agreed to purchase approximately $300 billion in compute capacity from Oracle over five years, with meaningful ramp beginning in 2027 and tied to large-scale data center buildouts. Coverage points to multi-gigawatt ambitions and staged delivery aligned with site readiness, power access, and hardware availability.

The Market Context and Significance

Reports frame this as one of the largest cloud commitments on record, coinciding with Oracle disclosures of substantial future contract value and rapid cloud infrastructure growth. The pact signals OpenAI’s strategic move toward multi-cloud resilience beyond prior reliance on a single hyperscaler.

The Compute Supply Chain

From Land to Latency: An End-to-End View

Scaling AI isn’t just racks and servers; it spans site acquisition, grid interconnection approvals, substations, on-site turbines or transmission upgrades, transformers, cooling systems, accelerators, networking, and orchestration software. Each link has long lead times, regulatory complexity, and interdependencies that can bottleneck delivery.
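One way to reason about those interdependencies is as a critical-path problem: the campus goes live only when the slowest chain of dependent steps finishes. The sketch below is illustrative only. The task names, the lead times in months, and the dependency graph are all hypothetical placeholders, not figures from any report on this deal.

```python
from graphlib import TopologicalSorter

# Hypothetical lead times in months; placeholders for illustration only.
LEAD_TIME_MONTHS = {
    "site_acquisition": 6,
    "interconnection_approval": 24,
    "substation_and_transformers": 18,
    "cooling_systems": 9,
    "accelerator_install": 12,
    "orchestration_software": 4,
}

# Each task maps to the set of tasks that must finish before it can start.
# This graph is a simplification assumed for the example.
DEPENDS_ON = {
    "site_acquisition": set(),
    "interconnection_approval": {"site_acquisition"},
    "substation_and_transformers": {"interconnection_approval"},
    "cooling_systems": {"site_acquisition"},
    "accelerator_install": {"substation_and_transformers", "cooling_systems"},
    "orchestration_software": {"accelerator_install"},
}


def earliest_finish(lead_times, depends_on):
    """Return the earliest finish month for every task."""
    finish = {}
    # static_order() yields each task only after all of its prerequisites.
    for task in TopologicalSorter(depends_on).static_order():
        start = max((finish[dep] for dep in depends_on[task]), default=0)
        finish[task] = start + lead_times[task]
    return finish


def critical_path(lead_times, depends_on):
    """Return (ordered list of tasks on the longest chain, total months)."""
    finish = earliest_finish(lead_times, depends_on)
    task = max(finish, key=finish.get)
    path = [task]
    # Walk backwards through whichever prerequisite finished last.
    while depends_on[task]:
        task = max(depends_on[task], key=finish.get)
        path.append(task)
    return list(reversed(path)), max(finish.values())


path, total_months = critical_path(LEAD_TIME_MONTHS, DEPENDS_ON)
print(" -> ".join(path), f"({total_months} months)")
```

With these placeholder numbers the power chain (interconnection, then substation and transformers) sets the schedule, while cooling has slack. Real programs would swap in their own lead times and dependencies.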

Demand Signaling and Capacity Lock-In

A multi-year, multi-hundred-billion-dollar demand signal lets Oracle pre-commit to components, land, and long-lead gear, smoothing procurement in constrained markets like HBM, optics, and power equipment. The upside is predictable scaling; the downside is concentration risk if any single component slips.

Power Is the Hard Constraint

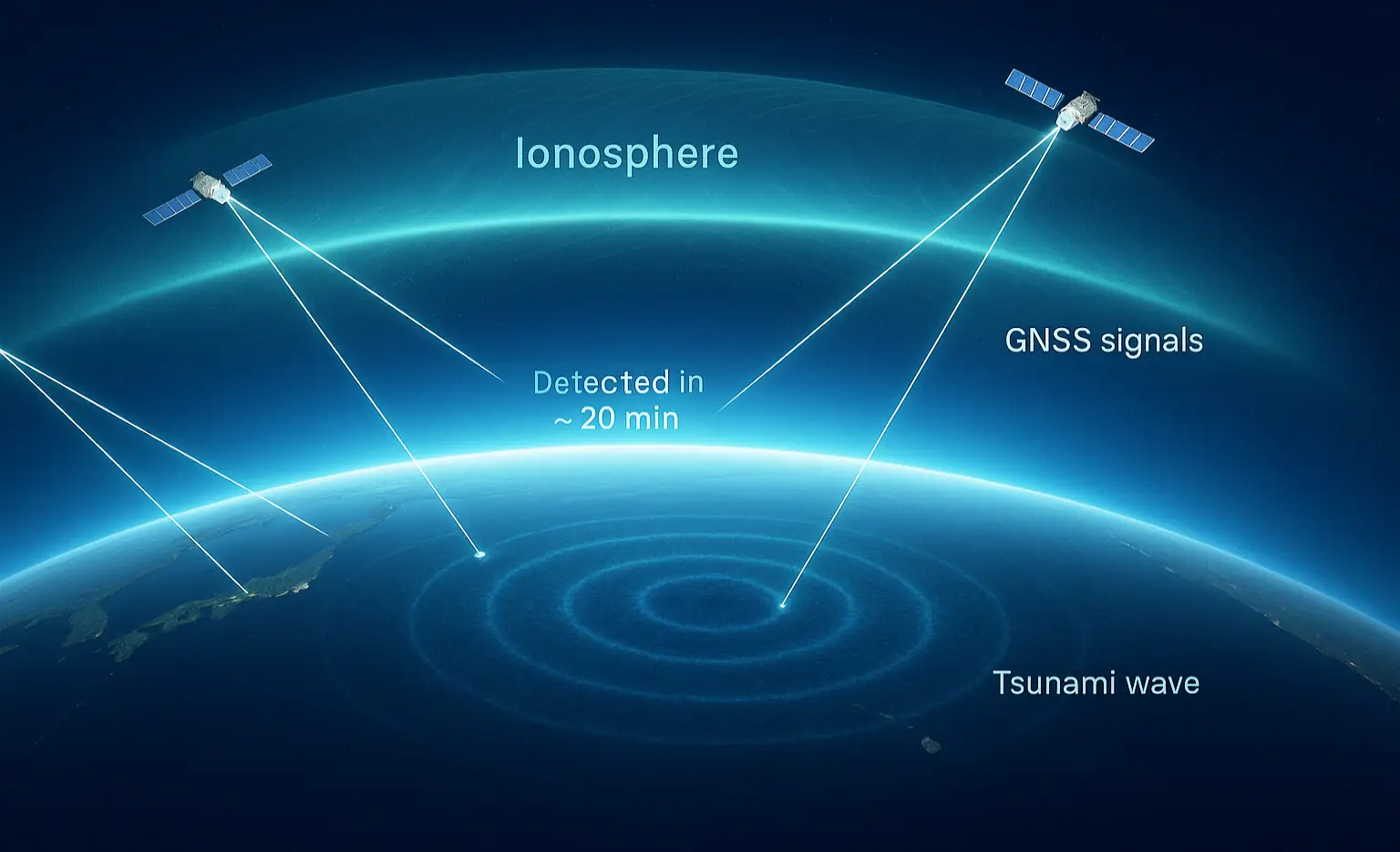

The Multi-Gigawatt Challenge

Securing and energizing several gigawatts of capacity, a load often likened to powering millions of homes, is the defining hurdle. Interconnection queues, transformer shortages, cooling-water limits, and permitting timelines can determine when and where capacity goes live.
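The "millions of homes" comparison can be checked with back-of-the-envelope arithmetic. The sketch below assumes an average household uses roughly 10,500 kWh per year, a round figure in the range commonly cited for U.S. homes, and treats the campus as running at a constant load. Both are assumptions, and the 4 GW input is a hypothetical example, not a reported figure for this deal.

```python
HOURS_PER_YEAR = 8760


def homes_equivalent(campus_gw, household_kwh_per_year=10_500.0, load_factor=1.0):
    """Number of average households whose demand matches the campus load.

    household_kwh_per_year and load_factor are assumptions for illustration.
    """
    avg_household_kw = household_kwh_per_year / HOURS_PER_YEAR
    campus_kw = campus_gw * 1_000_000 * load_factor  # 1 GW = 1,000,000 kW
    return campus_kw / avg_household_kw


def annual_energy_twh(campus_gw, load_factor=1.0):
    """Annual energy drawn by the campus, in terawatt-hours."""
    return campus_gw * load_factor * HOURS_PER_YEAR / 1000  # GWh -> TWh


# Hypothetical 4 GW campus portfolio at constant load.
print(round(homes_equivalent(4.0)))
print(annual_energy_twh(4.0))
```

Under these assumptions each gigawatt corresponds to roughly 834,000 average homes, so a multi-gigawatt footprint does land in the millions.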

A Portfolio Approach to Siting

Expect a distributed campus strategy across multiple U.S. states and possibly international regions to balance grid capacity, favorable policy, and latency needs. Partnerships for on-site or nearby generation, waste-heat reuse, and demand management will differentiate cost per token over time.

Chips, Custom Silicon, and Risk Hedging

Heterogeneous Fleets by Design

Hyperscalers blend today’s leading accelerators with tomorrow’s custom silicon to hedge price, supply, and performance. Software portability, compiler maturity, and framework support become as critical as raw throughput (TOPS or FLOPS), so operations teams must plan for mixed-architecture clusters.

Strategic Silicon Partnerships

Pursuit of custom ASICs with established chipmakers can reduce dependency on single vendors and tune hardware to model roadmaps. Long-term agreements across GPUs, HBM, advanced packaging, and optics mitigate shortages but require precise capacity forecasting.

Cloud Market Realignment

From Single-Cloud to Multi-Cloud

This deal codifies multi-cloud as a strategic necessity for AI-heavy workloads, balancing price, capacity guarantees, energy-secured regions, and compliance. It pressures incumbents to compete on energy-backed availability, training fabric performance, and sustained-usage economics.

Oracle’s Step-Change in Relevance

For Oracle, anchoring a flagship AI tenant elevates its position in the hyperscale league table and could reshape investor expectations. For competitors, the message is clear: future share will follow power, silicon, and networked training capacity—not just generic compute.

Financing and Durability Questions

Staged Commitments and Take-or-Pay Mechanics

A figure this large likely represents phased ramps tied to energization, hardware delivery, and utilization targets. Terms such as take-or-pay, price escalators, and performance SLAs will determine how risk is shared across market cycles.
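To make the risk-sharing concrete, here is a minimal sketch of how a take-or-pay floor and a price escalator might interact. The actual contract terms have not been published, so the 80% floor, the 3% escalator, and every input below are hypothetical, and the function names are invented for this example.

```python
def escalated_price(base_price, annual_escalator, year_index):
    """Unit price in a given contract year (year_index 0 is the first year)."""
    return base_price * (1 + annual_escalator) ** year_index


def annual_payment(committed_units, used_units, unit_price, take_or_pay_floor=0.8):
    """Payment owed for one year under a simple take-or-pay clause.

    The buyer pays for actual usage, but never for less than the floor
    share of the committed volume. The 0.8 default is an assumption.
    """
    billable_units = max(used_units, committed_units * take_or_pay_floor)
    return billable_units * unit_price


# Hypothetical three-year ramp: committed and used capacity in arbitrary units.
ramp = [(100, 50), (200, 190), (300, 320)]
for year, (committed, used) in enumerate(ramp):
    price = escalated_price(2.0, 0.03, year)
    print(year, round(annual_payment(committed, used, price), 2))
```

In the first hypothetical year the buyer uses half the commitment but pays for 80% of it, which is how the clause shifts demand risk from the provider to the customer.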

Architectural Shifts and Efficiency Risk

Rapid gains in model efficiency, sparsity, or inference offloading could change capacity needs midstream. Contract flexibility, resellability of capacity, and modular campus designs will help absorb shifts without stranding capex.

Second-Order Effects

Energy Planning and Grid Modernization

AI campuses can anchor new transmission, spur substation builds, and catalyze policy changes around grid interconnection timelines. Local stakeholders and regulators effectively become supply chain partners.

Hardware and Packaging Acceleration

Clear, long-dated demand unlocks upstream investments in HBM, advanced packaging, optical interconnects, and liquid cooling. If suppliers scale in sync, future lead times compress; if not, bottlenecks persist.

Normalization of AI Multi-Cloud

Enterprises will treat AI compute like a portfolio, splitting workloads across providers to optimize cost, availability, and compliance. Tools for model portability, observability, and cross-cloud routing will move from nice-to-have to required.
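A cross-cloud router can start out very simple: filter providers by capacity and compliance, then pick the cheapest. The sketch below shows that idea only. The provider names, prices, GPU counts, and region codes are hypothetical, and a production router would also weigh latency, data gravity, and egress costs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    price_per_gpu_hour: float
    available_gpus: int
    regions: frozenset  # region codes where this provider can run the job


def pick_provider(providers, gpus_needed, allowed_regions):
    """Return the cheapest provider with enough capacity in an allowed region.

    Returns None when no provider satisfies both constraints.
    """
    eligible = [
        p for p in providers
        if p.available_gpus >= gpus_needed and p.regions & allowed_regions
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda p: p.price_per_gpu_hour)


# Hypothetical catalog for illustration.
catalog = [
    Provider("cloud_a", 2.10, 512, frozenset({"us", "eu"})),
    Provider("cloud_b", 1.80, 128, frozenset({"us"})),
    Provider("cloud_c", 1.60, 1024, frozenset({"apac"})),
]

choice = pick_provider(catalog, gpus_needed=256, allowed_regions=frozenset({"us", "eu"}))
print(choice.name if choice else "no eligible provider")
```

Here the cheapest provider is excluded on compliance and the next cheapest on capacity, which is the portfolio trade-off the paragraph above describes.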

What It Means for Builders

Compete on Tokens per Dollar and Watts per Token

Procurement and engineering will converge on throughput economics, prioritizing utilization, routing, and quantization strategies that translate directly to tokens per dollar and watts per token (strictly, energy per token, measured in joules). Efficiency becomes a product feature, not just an ops metric.
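Both metrics fall out of a few measurable quantities. The sketch below treats "watts per token" as energy per token: average power in watts divided by tokens per second gives joules per token. All inputs are hypothetical, and the function names are invented for this example.

```python
def tokens_per_dollar(peak_tokens_per_second, utilization, cost_per_hour):
    """Tokens produced per dollar of hourly compute cost.

    utilization is the fraction of peak throughput actually sustained (0 to 1).
    """
    sustained_tps = peak_tokens_per_second * utilization
    return sustained_tps * 3600 / cost_per_hour


def joules_per_token(avg_power_watts, peak_tokens_per_second, utilization):
    """Energy spent per token; 1 watt sustained for 1 second is 1 joule."""
    sustained_tps = peak_tokens_per_second * utilization
    return avg_power_watts / sustained_tps


# Hypothetical accelerator: 1,000 tokens/s peak, $4 per hour, 700 W average draw.
for utilization in (0.5, 0.9):
    print(
        utilization,
        tokens_per_dollar(1000, utilization, 4.0),
        joules_per_token(700, 1000, utilization),
    )
```

Raising utilization from 50% to 90% in this toy case lifts tokens per dollar by 80% without any change to hardware or price, which is why utilization and routing sit alongside quantization as economic levers.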

Design for Heterogeneity and Portability

Assume mixed accelerators, evolving compilers, and multi-cloud deployment from day one. Teams that treat infrastructure as a synchronized industrial system—not a collection of servers—will capture the most value as capacity scales.